As I wrote here recently, I will be taking part in a roundtable discussion on media theory at this year’s FLOW Conference at the University of Texas (September 11-13, 2014). My panel, which will take place on Friday, September 12, from 1:45 to 3:00 pm (the full conference schedule is now online here), consists of Drew Ayers (Northeastern University), Hunter Hargraves (Brown University), Philip Scepanski (Vassar College), Ted Friedman (Georgia State University), and myself.

In preparation for the panel, which is organized as a roundtable discussion rather than a series of paper presentations, each of us is asked to formulate a short position paper outlining our answer to an overarching discussion question. Clearly, the positions put forward in such papers are not intended to be definitive answers but provocations for further discussion. Below, I am posting my position paper, and I would be happy to receive any feedback on it that readers of the blog might care to offer.

Nonhuman Media Theories and their Human Relevance

Response to the FLOW 2014 roundtable discussion question “Theory: How Can Media Studies Make ‘The T Word’ More User-Friendly?”

Shane Denson (Leibniz Universität Hannover, Germany / Duke University)

1. Theory Between the Human and the Nonhuman

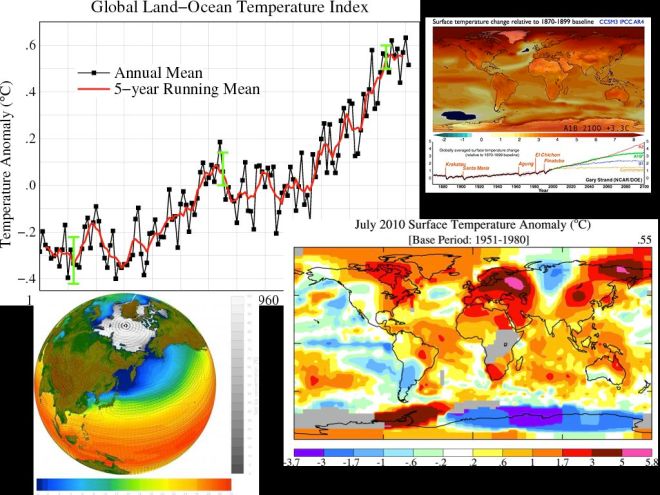

Rejecting the excesses of deconstructive “high theory,” approaches like cultural studies promised to be more down-to-earth and “user-friendly.” While hardly non-theoretical, this was “theory with a human face”; against poststructuralism’s anti-humanistic tendencies, human interaction (direct or mediated) returned to the center of inquiry. Today, however, we are faced with (medial) realities that exceed or bypass human perspectives and interests: from the microtemporal scale of computation to the global scale of climate change, our world challenges us to think beyond the human and embrace the nonhuman as an irreducible element in our experience and agency. Without returning to the old high theory, it therefore behooves us to reconcile the human and the nonhuman. Actor-network theory, affect theory, media archaeology, “German media theory,” and ecological media theory all highlight the role of the nonhuman, while their political (and hence human) relevance asserts itself in the face of very palpable crises – e.g. ecological disaster, which makes our own extinction thinkable (and generates a great variety of media activity), but also the inhuman scale and scope of global surveillance apparatuses.

2. With Friends Like These…

The roundtable discussion question asks how theory can be made more “user-friendly”; but first we should ask what this term suggests for the study of media. Significantly, the term “user-friendly” itself originates in the context of media – specifically computer systems, interfaces, and software – as late as the 1970s or early 1980s. Its appearance in that context can be seen as a response to the rapidly increasing complexity of a type of media – digital computational media – that function algorithmically rather than indexically, in a register that, unlike cinema and other analogue media, is not tuned to the sense-ratios of human perception but is designed precisely to outstrip human faculties in terms of speed and efficiency. The idea of user-friendliness implies a layer of easy, ergonomic interface that would tame these burgeoning powers and put them in the user’s control, hence empowering rather than overwhelming. As consumers, we expect our media technologies to empower us thus: they should enable rather than obstruct our purposes. But should we expect this as students of media? Should we not instead question the ideology of transparency, and the disciplining of agency it involves? Hackers have long complained about the excesses of “user-obsequious” interfaces, about “menuitis” and the paradoxical disempowerment of users through the narrow-bandwidth interfaces of WIMP systems (so-called because of their reliance on “windows, icons, menus/mice, pointers”). Such criticisms challenge us to rethink our role as users – both of media and of media theory – and to adopt a more experimental attitude towards media, which are capable of shaping as much as accommodating human interests.

3. Media as Mediators

The give and take between empowerment and disempowerment highlights the situational, relational, and ultimately transformational power of media. And while cultural studies countenanced such phenomena in terms of hegemony, subversion, and resistance, the very agency of the would-be “user” of media might be open to more radical destabilization – particularly against the background of media’s digital revision, which “discorrelates” media contents (images, sounds, etc.) from human perception and calls into question the validity of a stable human perspective. More generally, it makes sense to think about media in terms of agencies and affordances rather than mere channels between pre-existing subjects and objects – to see media, in Bruno Latour’s terms, not as mere “intermediaries” but as “mediators” that generate specific, historically contingent differences between subject and object, nature and culture, human and nonhuman. Recognizing this non-neutral, lively, and unpredictable dimension of media invites an experimental attitude that not only taps creative uses of contemporary media (as in media art) but also privileges a sort of hacktivist approach to media history as non-linear, non-teleological, and non-deterministic (as in media archaeology) – and that ultimately rethinks what media are.

4. Speculative Media Theory

By expanding the notion of mediation beyond the field of discrete media apparatuses, and beyond their communicative and representational functions, approaches like Latour’s actor-network theory gesture towards a nonhuman and ultimately speculative media theory concerned with an alterior realm, beyond the phenomenology of the human (as we know it). This sort of theory accords with the aims of speculative realism, a loose philosophical orientation defined primarily by its insistence on the need to break with “correlationism,” or the anthropocentric idea according to which being (or reality) is necessarily correlated with the categories of human thought, perception, and signification. Contemporary media in particular – including the machinic automatisms of facial recognition, acoustic fingerprinting, geotracking, and related systems, as well as the aesthetic deformations of what Steven Shaviro describes as “post-cinematic” moving images – similarly problematize the correlation of media with the forms (and norms) of human perception. More generally, a speculative and non-anthropocentric perspective equips us to think about the way in which media have always served not as neutral tools but, as Mark B. N. Hansen argues, as the very “environment for life” itself.

5. Media Theory for the End of the World

Perhaps most concretely, the appeal of this perspective lies in its appropriateness to an age of heightened awareness of ecological fragility. As we begin reimagining our era under the heading of the Anthropocene – as an age in which the large-scale environmental effects of human intervention are appallingly evident but in which the extinction of the human becomes thinkable as something more than a science-fiction fantasy – our media are caught up in a myriad of relations to the nonhuman world: they mediate between representational, metabolic, geological, and philosophical dimensions of an “environment for life” undergoing life-threatening climate change. As never before, students of media are called upon to correlate content-level messages (such as representations of extinction events) with the material infrastructures of media (such as their environmental situation and impact). The Anthropocene, in short, not only elicits but demands a nonhuman media theory.