Recently, I posted a video essay exploring suture, space, and vision in a scene from Nicolas Roeg’s Don’t Look Now (1973). Following my video essay hijab.key, which experimented with dirt-cheap DIY production (namely, using Apple’s Keynote presentation software instead of the much more expensive Adobe Premiere Pro or Final Cut Pro for video authoring), the more recent video, “Don’t Look Now: Paradoxes of Suture,” was also an experiment in using splitscreen techniques for deformative purposes, intended to allow the viewer to alternate between “close” (or focused) and “distant” (or scanning, scattered) viewing modes.

What I have begun to realize, however, is that these videos are pushing at the boundaries of what a “video essay” is or can be. And I think they are doing so in a way that goes beyond the more common dissatisfactions that many of us have with the term “video essay” — namely, the questionable implications of (and formal expectations invoked by) the term “essay,” which apparently fails to describe many videographic forms that are either more poetic in nature or that indeed try to do argumentative or scholarly work, but whose arguments are less linear or explicit than those of traditional essays. These are good reasons, I think, to prefer a term like “videographic criticism” (except that “criticism” is perhaps too narrow) or “videographic scholarship” over the more common “video essay.”

But these recent pieces raise issues that go beyond such concerns, I suggest. They challenge the “videographic” part of the equation as much as, or even more than, the “essayistic” part. Take my “Don’t Look Now” video, which as I stated is designed to give the viewer the opportunity to experiment with different ways or modes of looking. By dissecting a scene into its constituent shots and laying them out in a multiscreen format, I wanted to allow the viewer to approach the scene the way one looks at a page of comics; that is, the viewer is free to zoom into a particular panel (which in this case is a looping video clip) and become immersed in the spatiotemporal relations it describes, only then to zoom back out to regard a sequence, row, or page of such panels, before zooming back in to the next one, and so on. Thus, two segments in the recent video consist simply of fifteen looping shots, laid out side by side; they are intended to give the viewer time to experiment with this other, less linear mode of looking.

But the video format itself is linear, which raises problems for any such experiment. For example, how long should such a splitscreen configuration be allowed to run? Any answer will be arbitrary. What is the duration of a page of comics? The question is not nonsensical, as cartoonists can establish rhythms and pacings that will help to determine the psychological, structural, or empirical duration of the reading experience, but this will never be an absolute and determinate value, as it is of necessity in the medium of video. That is, the linear format of video forced me to make a decision about the length of these segments, and I chose, somewhat arbitrarily, to give the viewer first a brief (30 sec.) glance at the multiscreen composition, followed later (after a more explicitly argumentative section) by a longer look (2 min. at the end of the 9-minute video). But since the whole point was to enable a non-linear viewing experience (like the non-linear experience of reading comics), any decision involving such a linearization was bound to be unsatisfactory.

One viewer commented, for example:

“I think the theory is interesting but why the lengthy stretches of multi shot with audio? Irritating to the extent that they detract from your message.”

Two important aspects come together in this comment. For one thing, the video is seen as a vehicle for a message, an argument; in short, it is regarded as an “essay.” And since the essayistic impulse combines with the video medium to impose a linear form on something intended as a non-linear and less-than-argumentative experimental setup, it is all too understandable that the “lengthy stretches” were found “irritating” and beside the point. I responded:

“For me the theory [of suture] is less interesting than the reading/viewing technique enabled by the splitscreen. I wanted to give the viewer time to make his or her own connections/alternate readings. I realize that’s at odds with the linear course of an ‘essay,’ and the length of these sections is arbitrary. In the end I may try to switch to an interactive format that would allow the viewer to decide when to move on.”

It was dawning on me, in other words, that by transforming the Keynote presentation into a video essay (using screen recording software), I had indeed found an interesting alternative to expensive video authoring software (which might be particularly valuable for students and other people lacking in funding or institutional support); at the same time, however, I was unduly amputating the interactive affordances of the medium that I was working in. If I wanted to encourage a truly experimental form of vision, then I would need to take advantage of precisely these interactive capabilities.

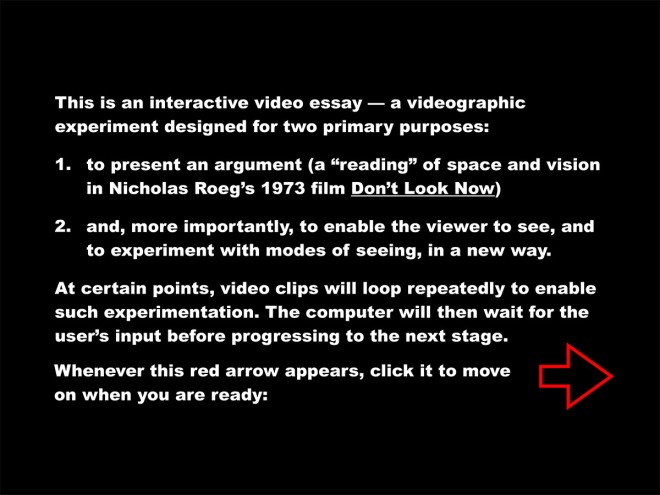

Basically, a Keynote file (like a PowerPoint presentation) is already an interactive file. Usually the interactivity is restricted to the rudimentary “click-to-proceed-to-the-next-slide” variety, but more complex and interesting forms of interaction (or automation) can be programmed in as well. In this case, I set up the presentation in such a way as to time certain events (making the automatic move from one slide to another a more “cinematic” sequence, for example), while waiting for user input for others (for example, giving the user the time to experiment with the splitscreen setup for as long as they like before moving on). You can download the autoplaying Keynote file (124 MB) here (or by clicking on the icon below) to see for yourself.
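
For readers who think more easily in code, the mix of timed and viewer-paced slides described above can be sketched roughly as follows. This is only an illustrative model in Python, not how Keynote works internally: the `Slide` class, the `playback_schedule` function, the slide names, and all durations are hypothetical and chosen just to show the idea that some slides advance on a timer while others wait for a click.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Slide:
    name: str
    # Seconds before the slide advances on its own.
    # None means "wait until the viewer clicks" (hypothetical convention).
    auto_advance_after: Optional[float] = None


def playback_schedule(
    slides: List[Slide], click_times: List[float]
) -> List[Tuple[str, float, Optional[float]]]:
    """Return (slide name, start time, end time) for each slide shown.

    Timed slides end after their fixed duration. Interactive slides end at
    the viewer's next click after the slide appears; if no such click
    exists, the slide stays open (end time None) and playback stops there.
    Simplifying assumption: clicks made during timed slides are ignored.
    """
    clicks = sorted(click_times)
    schedule = []
    now = 0.0
    for slide in slides:
        if slide.auto_advance_after is not None:
            end = now + slide.auto_advance_after
        else:
            end = next((t for t in clicks if t > now), None)
        schedule.append((slide.name, now, end))
        if end is None:
            break
        now = end
    return schedule


# Hypothetical sequence: the splitscreen slides wait for the viewer.
slides = [
    Slide("intro", 5.0),
    Slide("splitscreen"),
    Slide("argument", 10.0),
    Slide("splitscreen again"),
]
for row in playback_schedule(slides, click_times=[42.0]):
    print(row)
```

In this toy run the viewer lingers on the first splitscreen until the 42-second mark, and the final splitscreen simply stays open. The point is that the duration of the "page" is decided by the viewer rather than fixed in advance, which is exactly what the linear video could not offer.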

Of course, only users of Apple computers will be able to view the Keynote file, which is a serious limitation; ideally, an interactive video essay (or whatever we decide to call it) will not only be platform agnostic but also accessible online. Interestingly, Keynote offers the option to export your slideshow to HTML. The export is a bit buggy (see “known bugs” below), but with some tinkering you can get some decent results. Click here to see a web-based version of the same interactive piece. (Again, however, note the “known bugs” below.)

In any case, this is just a first foray. Keynote is probably not the ultimate tool for this kind of work, and I am actively exploring alternatives at the moment. But it is interesting, to say the least, to test the limits of the software for the purposes of web authoring (hint: the limits are many, and there is constant need for workarounds). It might especially be of interest to those without any web design experience, or in cases where you want to quickly put together a prototype of an interactive “essay” — but ultimately, we will need to move on to more sophisticated tools and platforms.

Finally, and foremost, I am interested in getting some feedback on what is working and what’s not in this experiment. I am interested both in technical glitches and in suggestions for making the experience of interacting with the piece more effective and engaging. In addition to the Keynote file and the online version, you can also download the complete HTML package as a single .zip file (66 MB), which will likely run more smoothly on your machine and also allow you to dissect the HTML and JavaScript if you’re so inclined.

However you access the piece, please leave a comment if you notice bugs or have any suggestions!

Known bugs, limitations, and workarounds:

- Keynote file only runs on Mac (workaround: access HTML version)

- Buggy browser support for HTML version:

  - Horrible font rendering in Firefox (“Arial Black” rendered as serif font)

  - Offline playback not supported in Google Chrome

  - Best support on Apple Safari (Internet Explorer not tested)

- Haven’t yet found the sweet spot for video compression (loading times may be too long for smooth online playback)